Introduction

The auto trade has at all times been on the forefront of innovation, continuously looking for methods to enhance effectivity, security, and buyer satisfaction.

Predictive modeling provides a brand new dimension to this effort by enabling data-driven choices that profit producers, insurers, and shoppers.

On this challenge, we give attention to predicting normalised losses for autos, an important metric for assessing vehicle threat and figuring out insurance coverage premiums.

Normalised losses are standardised metric that quantifies the relative threat of a automobile incurring insurance coverage losses.

This text leverages numerous machine studying fashions to offer correct and actionable predictions.

Vehicle insurance coverage dataset

Normalised losses are usually calculated primarily based on sure necessary knowledge similar to historic claims knowledge, adjusted to account for various components similar to restore prices, automobile options, and accident frequency.

This permits for constant comparability throughout completely different automobile fashions.

The dataset contains 206 rows and 26 columns, providing complete insights into numerous autos. It consists of technical specs, insurance coverage threat scores, and normalised loss values, offering a strong basis for evaluation.

To entry the dataset please go to the next hyperlink, which incorporates an in-depth article detailing a previous exploratory evaluation performed on the auto dataset.

Goal of predictive modelling

On this challenge, we goal to foretell normalised losses utilizing numerous machine studying fashions, together with Linear Regression, Ridge Regression, ElasticNet, Random Forest, Gradient Boosting, and Assist Vector Machines (SVM).

The principle steps to realize this goal embrace:

- Knowledge Preprocessing

- Mannequin Choice

- Mannequin Analysis

- Hyperparameter tuning

- Function Significance

Knowledge Preprocessing

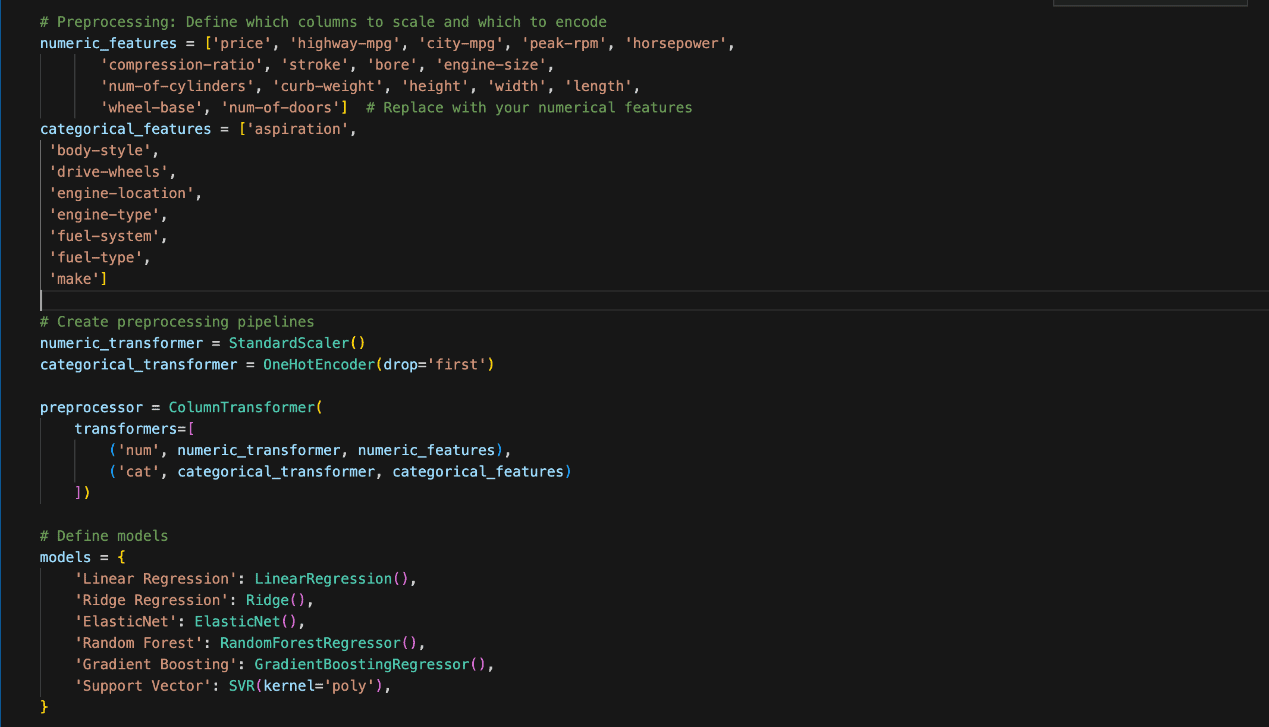

Preprocessing is important for getting ready the dataset earlier than making use of machine studying fashions. The Python coding within the determine under was used.

The options had been divided into two classes, specifically Numeric and Categorical.

The numeric options embrace values similar to ‘worth’, ‘horsepower’, and ‘engine-size’. We scaled them utilizing StandardScaler to make sure all numeric variables have the identical weight when fed into the fashions.

Alternatively, the specific or non-numeric options embrace ‘aspiration’, ‘body-style’, and ‘fuel-type’.

Categorical knowledge was reworked utilizing OneHotEncoder, which converts them into binary columns with 1 representing the presence and 0 representing the absence of every class.

Mannequin Choice

A number of algorithms may be utilised within the prediction of normalised losses within the vehicle insurance coverage enterprise.

Nevertheless, the efficiency of those algorithms will differ relying on the character of the dataset and the precise drawback to be tackled.

Subsequently, you will need to check out a number of algorithms and evaluate them primarily based on sure analysis standards to pick the most effective one whereas additionally aiming to stability complexity and interpretability.

Beneath are the algorithms thought of and explored.

1. Linear Regression

Linear Regression is without doubt one of the easiest machine studying fashions. It tries to discover a straight line (or hyperplane) that most closely fits the info.

The thought is that the goal variable ‘y’ (like ’normalised-loss’`) may be expressed as a linear mixture of the enter options ‘x’ (like’`worth’,’`horsepower’, and many others.). Study extra about Linear Regression right here.

The purpose of Linear Regression is to minimise the error between the expected and precise values. The error is measured utilizing the imply squared error (MSE).

2. Ridge Regression

Ridge Regression is like Linear Regression however with a penalty for giant coefficients (weights). This helps stop overfitting.

Math is nearly the identical as Linear Regression, but it surely provides a regularisation time period that penalises giant weights.

Study extra about Ridge Regression right here.

3. Random Forest Regressor

Random Forest is an ensemble methodology that mixes a number of Choice Timber. A call tree splits the info into smaller teams, studying easy guidelines (like “if worth > 10,000, predict excessive loss”).

A Random Forest builds many choice bushes and averages their outcomes. The randomness comes from:

– Choosing a random subset of knowledge for every tree.

– Utilizing random subsets of options at every cut up.

Every tree makes its personal prediction, and the ultimate result’s the common of all tree predictions.

Essential ideas:

– Splitting Standards: In regression, bushes are often cut up by minimising the imply squared error (MSE).

– Bagging: This implies every tree is skilled on a random subset of the info, which makes the forest extra sturdy.

Extra about Random Forest right here.

4. Gradient Boosting Regressor

Gradient Boosting is one other ensemble methodology that builds choice bushes. Nevertheless, not like Random Forest, every tree learns from the errors of the earlier one. It really works by becoming bushes sequentially.

The primary tree makes predictions, and the subsequent tree focuses on correcting the errors made by the earlier tree.

Find out about Gradient Boosting Regressor right here.

5. Assist Vector Regressor (SVR)

Assist Vector Regressor tries to discover a line (or hyperplane) that most closely fits the info, however as an alternative of minimising the error for all factors, it permits a margin of error. SVR makes use of a boundary the place it doesn’t care about errors (a margin).

SVR tries to stability minimising errors and preserving the mannequin easy by solely adjusting predictions outdoors this margin.

6. ElasticNet

ElasticNet combines the concepts of Lasso Regression and Ridge Regression. Like Ridge, it penalises giant coefficients but in addition like Lasso, it may well scale back some coefficients to zero, making it helpful for characteristic choice.

ElasticNet is sweet when you may have many options and need each regularisation and have choice.

Mannequin Analysis

A number of the extra generally recognized mannequin analysis strategies or metrics used on this challenge are RMSE, MSE, and R-squared.

Splitting the dataset into coaching and take a look at units is an analysis methodology used earlier than the primary mannequin is even constructed.

By setting apart a portion of the info because the take a look at set, we be certain that the mannequin is evaluated on unseen knowledge, offering an early and unbiased estimate of how effectively the mannequin will generalise new knowledge.

After experimenting with completely different algorithms utilizing the take a look at cut up ratio, the next efficiency metrics had been used to match the regression fashions on an equal footing:

Imply Squared Error (MSE):

MSE measures the common squared distinction between the precise and predicted values.

A decrease MSE signifies a greater match, but it surely’s delicate to outliers.

Root Imply Squared Error (RMSE):

The RMSE is the sq. root of MSE, and it’s helpful as a result of it’s in the identical items because the goal variable.

Imply Absolute Error (MAE):

MAE measures the common absolute distinction between the precise and predicted values.

It’s much less delicate to outliers than MSE.

Support authors and subscribe to content

This is premium stuff. Subscribe to read the entire article.